AI

Whether you are just getting started or advancing with AI, Marlabs offers the expertise needed to maximize its business value. We implement AI solutions in ways that free people for more impactful work while minimizing human error in repetitive processes.

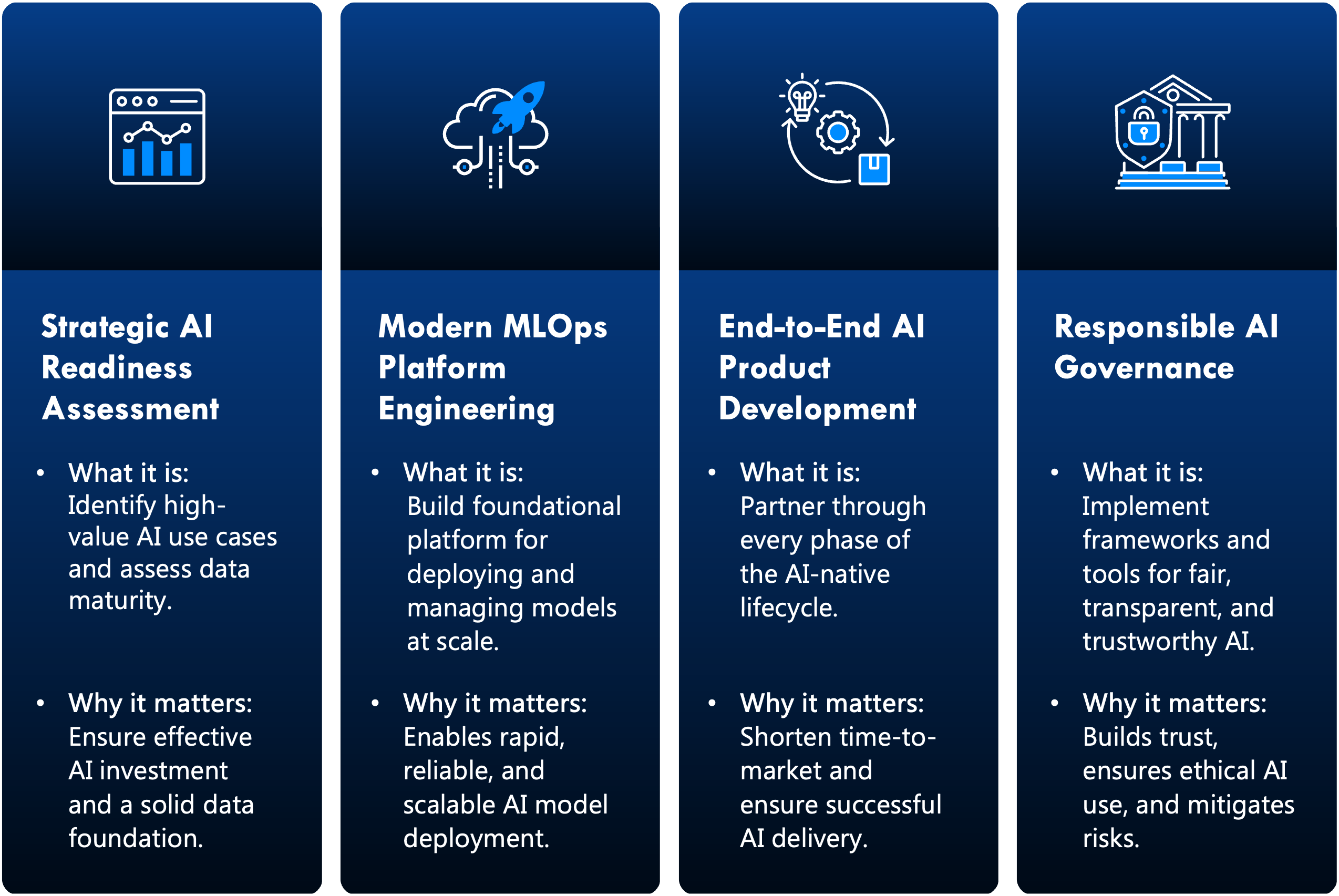

AI Discovery and Strategy

Whether you are enthusiastic or cautious about implementing AI, our discovery service is the ideal starting point. This comprehensive program gives you the tools to navigate the world of AI, understand its limits, and identify how it can transform your business.

Agentic and Interactive AI

At the heart of digital workforce augmentation, agentic AI is redefining organizations. We build AI agents that understand your organization, enabling them to improve customer experience and engagement, strengthen internal decision-making, increase productivity, address staffing shortages, and more.

AI Development

Cognitive AI can uncover patterns in your data that reveal opportunities for optimization and innovation faster and more accurately than would otherwise be possible. We have the experience to implement AI with the right guardrails to deliver meaningful, rapid results in your pursuit of insight.

AI Governance

Becoming an AI-driven organization requires trust at scale in the AI solutions deployed across your enterprise. At Marlabs, we build governance into everything we do, with clear, consistent, reusable policies alongside human oversight and intentional checkpoints.

AI Transformation

From assessing AI readiness to achieving AI excellence, we help organizations become AI-enabled through strategic, meaningful transformation. With our AI Evolution Framework, our team serves as a safety net to help you create meaningful strategies, avoid common mistakes, and augment your workforce with AI solutions and agents.

Data

Our team has decades of experience in sustainable, value-driven data and analytics projects. Whether you are moving away from departmental spreadsheets or pursuing cutting-edge data architecture, our results are proven.

Data Strategy

Every step of our clients' data journey should have meaning and impact. That is why we look beyond the data itself: we assess technology, processes, people, culture, and team adoption to drive data-based decisions.

Data Governance

Keeping data clean, consistent, and compliant is essential to building trust. As the data universe grows, this becomes both more challenging and more important. We structure your data to meet your business requirements and goals.

Data Management

We help you manage your data with a single, trusted source of truth for the organization, while breaking down silos and fostering a culture of data literacy.

Data Engineering

Turning your data strategy into reality is a complex task. With a strong track record in data migration, architecture engineering, and analytics enablement, we accelerate your path to faster, data-driven decisions.

Advanced Analytics

We explore the full potential of your data by identifying anomalies, predicting customer needs, optimizing resources, and more, all with a focus on generating accessible, actionable insights for your team.

Digital Product Engineering (DPE)

Marlabs’ tailored engineering solutions create intuitive digital experiences with high-performing software. We engineer products to be user-centric and to continuously add value in an age where user needs and technological possibilities are ever-changing.

Application Development

At Marlabs, we’re experts in creating innovative, future-ready application solutions. We partner with you to ensure your apps are maintainable and user-centric. As digital needs evolve, we work to continuously deliver new value through iterative development.

Application Modernization

Struggling with out-of-date applications? Looking to build a competitive edge with applications that meet customer and employee needs for automation, AI, and customization? Either way, our team is ready to help you make the transformation into the digital, cloud-focused age.

DevOps

Our DevOps services bring together your teams for software development and IT operations to deliver fast, secure, and reliable software. By orchestrating people, processes, and platforms, we establish clear and collaborative integration and automation solutions.

Product & Platform Strategy

Our scalable and repeatable framework aligns business vision with user needs and technical capabilities. By keeping the whole technology ecosystem in mind and embedding AI directly into the strategy, we create future-ready architectures that are secure and adaptable.

Product Sustenance & Support

Marlabs’ product sustenance and support focuses on anticipating and evolving with growing organizational and customer needs—not just reacting to issues. We make sure your products are always available, always secure, and always optimized with zero business disruption.

Quality Engineering

More than just traditional software testing, Marlabs’ quality engineering relies on cutting-edge, AI-driven testing methodologies and automation frameworks. From initial strategy to continuous improvement, we build quality engineering into every step of product engineering.

Intelligent Automation

As experts in intelligent automation, we take automating tasks to the next level. Our team can help you automate even unstructured repetitive tasks using intelligent, AI-powered automation. In doing so, we free your team for higher-value tasks.

AI Digital Governance

We help you take control of your processes by automating governance for security, consistency, and accuracy. By leveraging AI and machine learning, our intelligent automation solutions can provide unparalleled integrity in your data and processes.

RPA

With our robotic process automation (RPA) solutions, Marlabs reduces human error in repetitive processes. In doing so, we enable your people to spend their time and energy on more meaningful tasks. Then, by combining AI and automation, we take your processes to the next level.

Automation Partners

Our team has extensive experience with a variety of automation platforms. While we partner with major industry players to provide the best service possible, we also custom fit all our solutions to our clients’ business needs and objectives.

Experience Design

In the age of digital transformation, human-centered design has never been more important. Whatever stage you’re at, whether it’s initial ideation or modernization improvements, our team can help build intuitive solutions that drive your business forward.

UI / UX

Our user interface (UI) and user experience (UX) solutions cover the entire customer journey. We focus on helping you build trust with both internal and external users by providing them with natural and frustration-free interactions with your products.

Ideation & Innovation

Do you know there’s a market need, but you’re not sure what would fill it? Do you have an idea of what you want to do, but you’re not sure how to execute? Our expert consultants use their technical and industry knowledge to guide you through vision casting and design preparation.

Design Services

From ideation and planning to creation and refinement, our team offers services through the full design lifecycle. We develop comprehensive, creative, and thoroughly tested design products that incorporate all your business and customer needs.

Design as a Service

Our design-as-a-service offering takes a flexible and scalable approach to design initiatives. We provide the design support you need, when you need it, so that you can tackle projects with confidence.

ERP and CRM

With our ERP and CRM solutions, you can empower your organization the way you have always wanted. Whether you are migrating or modernizing, we build strategic platforms that better serve you and your customers.

ERP Migration

Ready to move to next-generation enterprise systems? Our specialists ensure agile, reliable, user-centric digital transformations.

ERP Modernization

Powerful but outdated systems can hold back your growth. We modernize your ERP to deliver real-time insights, intuitive experiences, and AI-powered innovation.

CRM Implementation and Integration

With a strategic focus on ROI and revenue, our team ensures not only CRM deployment but also adoption, with governance, training, and intelligent automation built in.

Cloud

As the world becomes increasingly digital, cloud infrastructure has never been more important. Whether you are migrating to a new system, implementing edge computing, or strengthening your digital security, our team can help you architect, migrate, optimize, and secure your cloud environments with confidence.

Cloud Engineering

Unlock the full potential of cloud-native architectures with purpose-built solutions that adapt as your business evolves. At Marlabs, our automation-driven DevOps pipelines ensure rapid delivery and continuous performance tuning, keeping you agile in a competitive landscape.

Cloud Migration

Don't let migration complexity slow your digital ambitions. Through customized, risk-reduced roadmaps, we transition your workloads seamlessly to the ideal cloud environment with minimal business disruption.

Digital Infrastructure

Modernize your IT backbone for multi-cloud realities, including networking, storage, and compute that scale on demand. With intelligent capacity planning, AI-driven monitoring, and 24/7 maintenance and optimization, we deliver the reliability, flexibility, and efficiency your critical digital operations need.

Digital Security

With security stakes higher than ever, proactive threat detection and zero-trust controls are non-negotiable. Our expertise in cloud-native security frameworks and proactive threat management ensures compliance and builds unshakable trust.

Digital Workspaces

Achieve true workforce flexibility through secure, high-performance virtual desktops and applications accessible from anywhere. Simplify management, strengthen security, and boost productivity while enabling a seamless user experience across your enterprise.

Edge Computing

Deploy compute and analytics closer to your data sources, enabling real-time insights and faster decision-making. We help embed continuous monitoring, identity governance, and automated responses across your cloud environment, balancing strong defense with frictionless access.

Infrastructure as a Service

Free yourself from the burden of hardware and partner with us to access on-demand compute, storage, and networking resources. Our managed services deliver instant scalability, improved resilience, and predictable costs for your core needs.

IoT (Internet of Things)

Want to turn smart devices into connected, scalable assets? We build custom IoT solutions that deliver real-time insights, automate operations, reduce costs, and improve the customer experience.

Platform Development

We build the control center of your IoT ecosystem: the software, tools, and capabilities to connect, monitor, and manage every component.

Cloud Backend Development

Our cloud engineers build high-performance, reliable, secure, real-time backends, from data ingestion through analysis and cost optimization.

Infrastructure Support

Our infrastructure services provide the foundation your IoT solution needs: connectivity, performance monitoring, and 24/7 resilience.

Device Data Management

We capture, organize, and analyze your data in real time. With analytics and machine learning, we surface relevant insights at the right moment.

Talent Services

Our recruitment consultants understand organizational needs and design the right strategy to meet them. With nearly 30 years of experience, we deliver agile, effective solutions.

Talent Solutions

With more than 10,000 hires completed, we specialize in identifying the best talent for your organization's needs, with both technical and cultural fit.

Talent Offerings

We offer end-to-end solutions: we reinforce HR operations, recruit industry-specific talent, and lead AI initiatives to expand the digital workforce. We also plan long-term strategies with talent excellence labs.

Talent Partnerships

Our approach goes beyond filling positions. We build on values such as diversity, inclusion, and equity to promote innovation, engagement, and sustainable success with representative teams.

Life Science

With more than 20 years of industry experience, Marlabs works with its clients to accelerate and innovate clinical processes. While ensuring security and compliance, our team helps develop life-changing technologies and products, increasing your competitiveness in the market.

Healthcare

For more than 20 years, Marlabs has helped healthcare organizations deliver quality patient care while improving operational and financial outcomes. With trusted data and technology, we support scheduling, clinical decisions, financial reporting, personalized care, and more.

Financial Services

Marlabs has a strong track record of helping financial-sector clients better serve and reach their audiences. By tapping the full potential of organizational and customer data, we drive secure, future-focused growth using analytics, AI, and digital product engineering.

Manufacturing

Under growing pressure to modernize, manufacturers turn to Marlabs to develop sustainable digital solutions for performance optimization and supply chain management. Through data management, digital infrastructure, IoT devices, and predictive analytics, we help you stay competitive.

Telecom & Media

As personalization and digitalization become essential demands, Marlabs helps telecom and media companies trust their data. As a result, our clients face fewer security risks, reduce customer churn, and find innovative ways to engage their customers.

Databricks

Organizations across industries unlock the power of their data with Databricks. Its innovative solutions scale with business needs. Marlabs' Databricks-certified team provides full support for migration, governance, and implementation, cultivating trusted data and analytics.

Salesforce

As a Salesforce Platinum Cloud Alliance partner, Marlabs helps you get the most value from your platform investments. From initial implementation and integration to AI additions and maintenance, our team is uniquely positioned to support all your Salesforce initiatives.

Amazon AWS

As part of the AWS Partner Network (APN), Marlabs' certified specialists cover all your AWS needs. We provide scalable, future-ready solutions in data, digital infrastructure, cloud migration, application development, AI implementations, and more.

Microsoft

An official Microsoft Fabric partner, Marlabs implements solutions with Azure, Power BI, Data Factory, Power Apps, and Copilot. For more than 18 years, we have guided clients from strategy to execution across all their Microsoft needs, enabling their internal teams to fully adopt the solutions.

Snowflake

With a team of certified architects, Marlabs is especially well qualified to accelerate time to return on investment with Snowflake's data warehouse. Our specialists collaborate to create holistic, well-integrated solutions aligned with business goals.

Perfecter.ai

As an official Perfecter.ai partner, Marlabs delivers autonomous intelligence through the platform's AI agents. These solutions are especially recognized in the financial sector for providing secure, automated workflows that reduce manual effort by up to 70%.

Profisee

Marlabs is a certified partner of Profisee, a leader in data management software. Its solutions are designed to integrate with Microsoft environments, and our cross-trained specialists are qualified to turn your data strategy into reality with these integrations.

ArchLynk SAP

With Databricks-certified engineers and a partnership with ArchLynk's SAP specialists, Marlabs connects operational and supply chain data in SAP to Databricks' innovative data science and AI capabilities. Together, Marlabs and ArchLynk offer a 360° view of operational and business data, with real-time updates.

Infor

Infor is a global leader in industry-specific business cloud software products. Our strong partnership amplifies the impact of your ERP migrations and implementations. Whether you're looking for a full strategic implementation or maintenance testing services, our experts are here to help.