AI

Whether you're initiating or advancing AI adoption, Marlabs provides the expertise to maximize AI's enterprise value. We implement AI solutions in a way that frees people up for more impactful work while minimizing human error in repetitive processes.

AgilityAI

Marlabs AgilityAI is not just a platform; it is a comprehensive framework for transformation. It addresses the core points of failure by providing a structured approach to aligning AI with business value, pre-built accelerators that reduce implementation time, and the operational models needed to deploy AI with confidence.

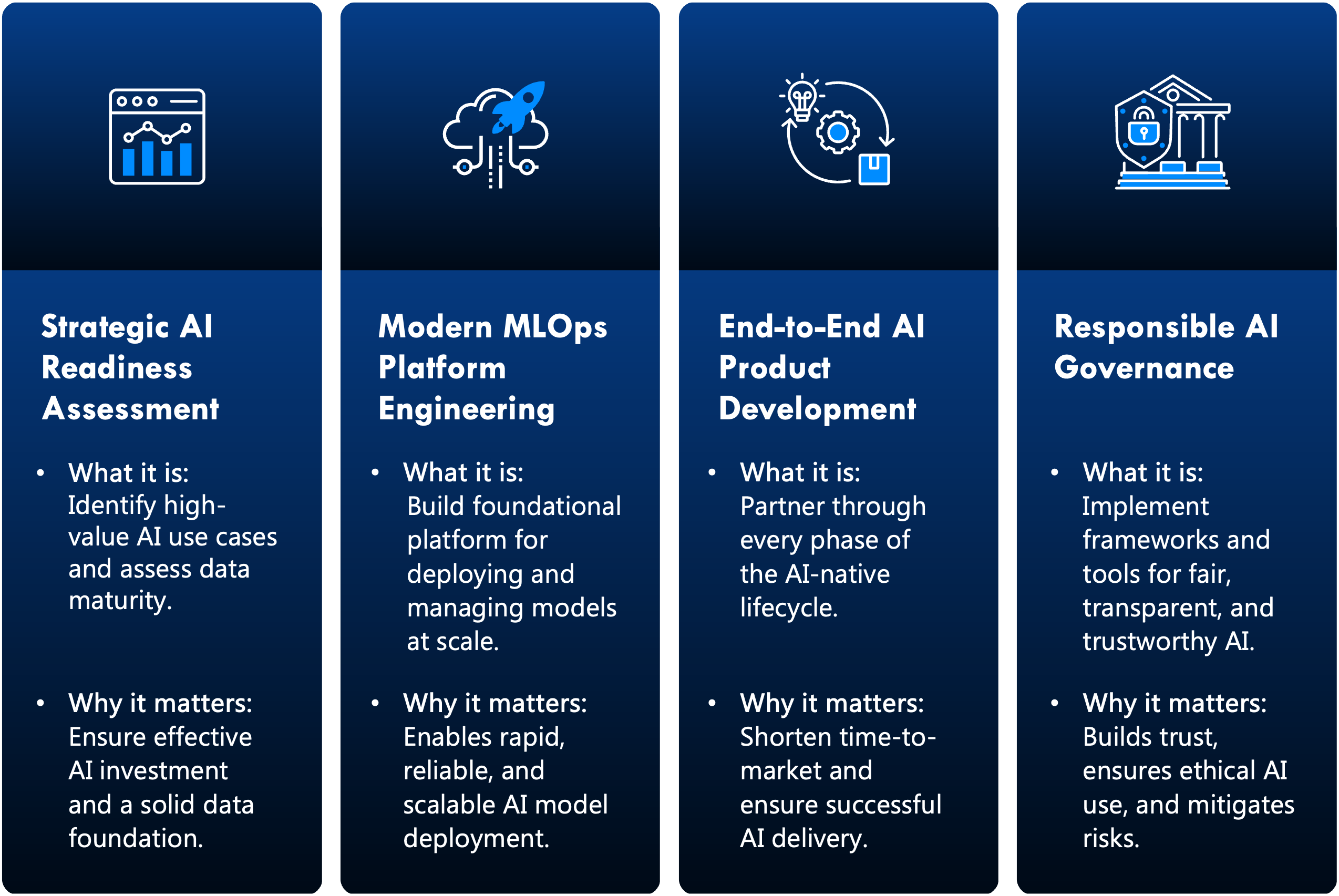

AI Discovery & Strategy

Whether you’re excited or cautious about implementing AI, our discovery service is your perfect launchpad. This comprehensive program equips you with the tools to navigate the exciting world of AI, understand its limitations, and identify how it can transform your business.

Agentic & Interactive AI

At the core of digital workforce augmentation, agentic AI is redefining organizations. We build AI agents that understand your organization, enabling them to boost customer experience and engagement, improve internal decision-making, increase productivity, solve understaffing concerns, and more.

AI Development

Cognitive AI can discover patterns in your data that reveal opportunities for optimization and innovation faster and more accurately than otherwise possible. We have the expertise to implement AI with the proper guardrails for meaningful, expedited results in your quest for knowledge.

AI Governance

Becoming an AI-powered organization requires trust at scale in AI solutions implemented throughout your business. At Marlabs, we embed governance into everything we do with clear, consistent, and reusable policies, along with human oversight and purposeful checkpoints.

AI Transformation

From evaluating AI readiness to reaching AI excellence, we help organizations become AI-empowered through strategic and meaningful transformation. With our AI Evolution Framework, our team serves as a safety net to help you strategize meaningfully, avoid common mistakes, and augment your workforce with AI solutions and agents.

Data

Our team has decades of experience cultivating sustainable, value-driven efforts in data and analytics. Whether you’re just moving beyond department-focused spreadsheets or looking to upgrade your data architecture to discover cutting-edge insights, our team has proven results you can trust.

Data Strategy

At Marlabs, we want every step of our clients’ data journeys to have meaning and impact. That’s why we include more than data when creating a data strategy. We consider technology, processes, people, culture, and team adoption to help you drive data-informed decision-making.

Data Governance

Keeping data clean, consistent, and compliant lays the foundation for data you can trust. As the world of data continues to expand, doing so grows more difficult—and more important. We can structure your data to meet all your business requirements and goals.

Data Management

Marlabs can help you manage your data so that you have a single, trustworthy source of truth for the organization, one that is comprehensive and up to date. In doing so, we also help you break down silos and build a data-literate organization.

Data Engineering

Taking your data strategy from plan to execution is a daunting task for any team. With a rich history of successful data migrations, data architecture engineering, and analytics enablement, Marlabs can accelerate your success so you can make data-driven decisions sooner.

Advanced Analytics

We harness the full potential of your data by implementing analytics solutions that point out anomalies, predict customer needs, highlight ways to optimize resources, and so much more. We focus on developing analytics that are actionable and accessible for your team.

Digital Product Engineering (DPE)

Marlabs’ tailored engineering solutions create intuitive digital experiences with high-performing software. We engineer products to be user-centric and to continuously add value in an age where user needs and technological possibilities are ever-changing.

Application Development

At Marlabs, we’re experts in creating innovative, future-ready application solutions. We partner with you to ensure your apps are maintainable and user-centric. As digital needs evolve, we work to continuously deliver new value through iterative development.

Application Modernization

Struggling with out-of-date applications? Looking to build a competitive edge with applications that meet customer and employee needs for automation, AI, and customization? Either way, our team is ready to help you make the transition to the digital, cloud-focused age.

DevOps

Our DevOps services bring together your teams for software development and IT operations to deliver fast, secure, and reliable software. By orchestrating people, processes, and platforms, we establish clear and collaborative integration and automation solutions.

Product & Platform Strategy

Our scalable and repeatable framework aligns business vision with user needs and technical capabilities. By keeping the whole technology ecosystem in mind and embedding AI directly into the strategy, we create future-ready architectures that are secure and adaptable.

Product Sustenance & Support

Marlabs’ product sustenance and support focuses on anticipating and evolving with growing organizational and customer needs—not just reacting to issues. We make sure your products are always available, always secure, and always optimized with zero business disruption.

Quality Engineering

More than just traditional software testing, Marlabs’ quality engineering relies on cutting-edge, AI-driven testing methodologies and automation frameworks. From initial strategy to continuous improvement, we build quality engineering into every step of product engineering.

Intelligent Automation

As experts in intelligent automation, we take task automation to the next level. Our team can help you automate even unstructured, repetitive tasks using intelligent, AI-powered automation. In doing so, we free your team to focus on higher-value work.

IA Digital Governance

We help you take control of your processes by automating governance for security, consistency, and accuracy. By leveraging AI and machine learning, our intelligent automation solutions can provide unparalleled integrity in your data and processes.

RPA

With our robotic process automation (RPA) solutions, Marlabs reduces human error in repetitive processes. In doing so, we enable your people to spend their time and energy on more meaningful tasks. Then, by combining AI and automation, we take your processes to the next level.

Automation Partners

Our team has extensive experience with a variety of automation platforms. While we partner with major industry players to provide the best service possible, we also custom fit all our solutions to our clients’ business needs and objectives.

Experience Design

In the age of digital transformation, human-centered design has never been more important. Whatever stage you’re at, whether it’s initial ideation or modernization improvements, our team can help build intuitive solutions that drive your business forward.

UI / UX

Our user interface (UI) and user experience (UX) solutions address the entire customer journey. We help you build trust with both internal and external users by giving them natural, frustration-free interactions with your products.

Ideation & Innovation

Do you know there’s a market need, but you’re not sure what would fill it? Do you have an idea of what you want to do, but you’re not sure how to execute it? Our expert consultants use their technical and industry knowledge to guide you through vision casting and design preparation.

Design Services

From ideation and planning to creation and refinement, our team offers services through the full design lifecycle. We develop comprehensive, creative, and thoroughly tested design products that incorporate all your business and customer needs.

Design as a Service

Our design-as-a-service offering takes a flexible and scalable approach to design initiatives. We provide the design support you need, when you need it, so that you can tackle projects with confidence.

ERP & CRM

With our ERP and CRM solutions, you can empower your organization the way you always wanted to. Whether you're looking to migrate to a new system or reinvigorate your current setup, our experts strategically design platforms to unlock possibilities that serve you and your customers better than ever before.

ERP Migration

Ready to make the move to next-gen enterprise systems? Our meticulous digital transformation experts empower users and accelerate ERP migration for rapid results you can trust.

ERP Modernization

In the age of intelligence, the systems that could be the most powerful are sometimes the very ones slowing down business growth. Our team modernizes ERPs to enable real-time insights, intuitive user experiences, and future-ready AI innovation.

CRM Implementation & Integration

With a strategic focus on ROI and revenue results, our team does more than just set up your CRM system. We focus on user adoption and empowerment by building in governance, training, and intelligent automation.

Cloud

As the world becomes ever more digital, cloud infrastructures have never been more important. Whether you’re migrating to a new system, implementing edge computing, or increasing your digital security, our team can help you confidently architect, migrate, optimize, and secure your cloud environments.

Cloud Engineering

Unlock the full potential of cloud‑native architectures with purpose‑built solutions that adapt as your business evolves. At Marlabs, our automation‑driven DevOps pipelines ensure rapid delivery and continuous performance tuning, keeping you agile in a competitive landscape.

Cloud Migration

Don’t let migration complexity slow down your digital ambitions. Through personalized, risk-mitigated roadmaps, we seamlessly transition your workloads to the optimal cloud environment to ensure minimal business disruption.

Digital Infrastructure

Modernize your IT backbone for multi-cloud realities including networks, storage, and compute that scale on demand. With intelligent capacity planning, AI-driven monitoring, and 24/7 maintenance and optimization, we deliver the reliability, flexibility, and efficiency your critical digital operations need.

Digital Security

With security stakes higher than ever, proactive threat detection and zero‑trust controls are non‑negotiable. Our expertise in cloud-native security frameworks and proactive threat management ensures compliance and builds unshakeable trust.

Digital Workspaces

Achieve true workforce flexibility through secure, high-performance virtual desktops and applications accessible anywhere. Simplify management, enhance security, and boost productivity while enabling a seamless user experience across your business.

Edge Computing

Deploy computing and analytics closer to your data sources, enabling real-time insights and faster decision-making. We help embed continuous monitoring, identity governance, and automated responses across your cloud estate — balancing iron‑clad defense with frictionless access.

Infrastructure as a Service

Ditch the hardware burden and partner with us to access on‑demand compute, storage, and networking resources. Our managed services deliver instant scalability, enhanced resilience, and predictable costs for your core needs.

IoT

Looking to transform your smart devices into intelligent, scalable connected assets? Marlabs builds custom IoT solutions that unlock real-time insights, automate operations, cut costs, and elevate customer experiences.

Platform Development

Think of platform development as the control center of your IoT solution. Our experts create the software and provide the tools and functionality that connect, control, monitor, and manage all the components in your IoT ecosystem.

Cloud Backend Development

Marlabs’ cloud engineers develop high-performance backends that operate reliably, securely, and in real time. We design and handle everything, from receiving and processing the data to analytics and cost optimization.

Infrastructure & Support

Marlabs’ infrastructure services act as the backbone to power your IoT solution. Our team implements and manages the foundational systems that connect your devices to the cloud, monitor performance, and ensure the system’s resilience 24/7.

Device Data Management

We capture, organize, and analyze your data in real time. Using advanced analytics and machine learning, we uncover meaningful insights when it matters most.

Talent Services

Our team of employment staffing consultants are experts in understanding organizational talent needs and organizing the proper strategy and people to fill those needs. With nearly three decades of proven experience, we deliver comprehensive talent solutions with quick turnarounds to help you fill the right roles with the right people.

Talent Solutions

With over 10,000 placements under our belt, our experts in enterprise staffing can help you quickly and effectively find the best fit for your organization's needs. Having served hundreds of clients across dozens of industries, we know how to spot specialized talent that's also a good cultural fit.

Talent Offerings

Whatever your talent needs, our team can help. We can enhance your staffing operations overall, source top-tier, industry-specific talent for particular roles, and help you stay ahead with AI-driven digital workforce augmentation. For long-term, strategic talent planning, our team has also developed a framework for establishing Center of Excellence talent labs.

Talent Partnership

Our comprehensive approach to equal opportunity, inclusivity, diversity, and equity goes beyond just connecting people with the right skills to the right roles. We also work with businesses to understand their organizational values and how we can drive innovation, employee engagement, and long-term success by creating an equitable and representative workforce.

Life Science

With over 20 years of industry experience, Marlabs partners with our clients to accelerate and innovate clinical processes. Ensuring safety and compliance, our team can help you develop life-changing products and technologies and increase your market competitiveness.

Healthcare

For 20+ years, Marlabs has helped healthcare organizations deliver quality care to patients while also improving operational and financial outcomes. Through trustworthy data and technology, we support patient scheduling, clinical decision-making, financial reporting, personalized care, and more.

Financial Services

Marlabs has a rich history of helping financial services clients better serve and reach their customers. By unlocking the full potential of customer and organizational data, we drive secure, forward-minded growth through analytics, AI, and digital product engineering implementations.

Manufacturing

With the increasing pressure to modernize, manufacturers turn to Marlabs to develop sustainable digital solutions for performance optimization and supply chain management. Through data management, digital infrastructures, IoT devices, and predictive analytics, we help you stay competitive.

Telecom & Media

As customer demands call for increasing customization and digitization, Marlabs helps telecommunications and media organizations trust their data. In doing so, our clients face fewer security risks, reduce customer churn, and find innovative ways to engage customers.

Databricks

Organizations across industries unleash the power of their data with Databricks, whose innovative solutions scale with your business needs. Marlabs’ team of certified Databricks engineers can support you through migration, governance, and implementation to cultivate data and analytics you can trust.

Salesforce

As a Salesforce Platinum Cloud Alliance partner, Marlabs helps you make the most out of your Salesforce investments. From initial implementation and integration to AI additions and maintenance, our team is uniquely positioned to support your Salesforce initiatives.

AWS

Part of the AWS Partner Network (APN), Marlabs’ certified experts can meet all your AWS needs. We provide scalable, future-ready AWS solutions for data initiatives, digital infrastructures, cloud migrations, application development, AI implementations, and more.

Microsoft

As an official partner of Microsoft Fabric, Marlabs implements solutions for Azure, Power BI, Data Factory, Power Apps, and Copilot. For over 18 years, we’ve taken clients from strategy through execution for all their Microsoft needs and enabled internal teams to fully adopt their solutions.

Snowflake

With our team of certified Snowflake architects, Marlabs is uniquely positioned to shorten your time to value with Snowflake’s data warehousing. Our architects partner with you to develop a holistic and well-integrated solution that enables you to reach your business objectives.

Perfecter.ai

As an official Perfecter.ai partner, Marlabs delivers autonomous intelligence through Perfecter.ai’s AI agents. These solutions are particularly well-known in the financial services industry for offering secure, automatic workflows that slash manual effort by 70%.

Profisee

Marlabs is a certified partner with Profisee, a leader in data management software. Their solutions are tailored for integration with Microsoft environments, and our cross-trained experts are specially qualified to help organizations bring their data strategy to life with these integrations.

ArchLynk SAP

Marlabs’ certified Databricks engineers, together with expert SAP partners at ArchLynk, bridge the gap between operational and supply chain data within SAP and the innovative data science and AI capabilities of Databricks. Marlabs’ and ArchLynk’s engineers offer a 360-degree view of operational, supply chain, and other enterprise data that updates in real time.

Infor

Infor is a global leader in industry-specific business cloud software products. Our strong partnership amplifies the impact of your ERP migrations and implementations. Whether you're looking for a full strategic implementation or maintenance testing services, our experts are here to help.

About

What We Do

Your trusted transformation partner, Marlabs delivers complex, AI-driven technology solutions across industries. Our intelligent solutions span AI, data, analytics, automation, cloud, product engineering, IoT, ERP, CRM, and experience design.

Why Choose Marlabs

With over 2,000 employees, more than 100 awards, and nearly three decades of experience, Marlabs delivers where others fail. Our cross-functional teams bring clients measurable results through their collaborative and agile approach to strategic execution.

Awards & Recognitions

Major industry analysts such as Zinnov Zones, ISG, and Everest Group, among many others, have recognized our teams as leaders across industries, technical solutions, and consulting services.

Marlabs Executive Team

Our executive team brings together deep industry expertise, bold leadership, and a shared commitment to delivering transformative results for our clients. Guided by a client‑centric mindset and a passion for collaboration, they lead Marlabs with a clear focus on innovation and measurable impact.

Frequently Asked Questions

Want to know more about what we offer, our mission, how we ensure practical results, our industry-specific experience, our engagement models, and our story? Find all the answers and more here.